In computer networking, the end-to-end principle is a design guideline that suggests certain functionality—such as reliability—is best handled at the endpoints rather than in the intermediate nodes of a network.

The reasoning is straightforward: only the endpoints can truly define and verify reliability, so any attempt by intermediate nodes to enforce it is redundant, useful at most as a performance optimization. Because the network is shared infrastructure, it’s better used to provide functionality that only it can deliver, rather than duplicating what endpoints must do anyway.
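To make the argument concrete, here is a minimal Python sketch in the spirit of the classic "careful file transfer" example. Everything in it is hypothetical and chosen for illustration: the function names `send`, `hop`, and `careful_transfer`, the 4-byte packets, CRC-32 for the per-hop checks, and SHA-256 for the end-to-end check. Each intermediate hop verifies the checksum it receives. A fault inside one hop, modelled here as corrupting data after the hop's own check and re-framing it with a fresh CRC, still slips past every later hop. Only the receiver, comparing against the sender's digest, catches it.

```python
import hashlib
import zlib

# Hypothetical simulation: names, packet size, and checksum choices are illustrative only.


def send(data: bytes, chunk_size: int = 4):
    """Split data into (payload, crc32) packets and compute an end-to-end SHA-256 digest."""
    packets = [
        (data[i:i + chunk_size], zlib.crc32(data[i:i + chunk_size]))
        for i in range(0, len(data), chunk_size)
    ]
    return packets, hashlib.sha256(data).hexdigest()


def hop(packets, faulty: bool = False):
    """An intermediate node: check each packet's per-hop CRC, then forward it.

    A faulty node damages the first packet *after* its own check and attaches a
    fresh, matching CRC, so every downstream per-hop check still passes.
    """
    forwarded = []
    for payload, crc in packets:
        if zlib.crc32(payload) != crc:
            raise ValueError("per-hop checksum mismatch")
        if faulty and payload:
            payload = bytes([payload[0] ^ 0xFF]) + payload[1:]  # flip bits "in memory"
            crc = zlib.crc32(payload)  # re-frame the damaged data with a valid CRC
            faulty = False  # corrupt only one packet
        forwarded.append((payload, crc))
    return forwarded


def careful_transfer(data: bytes, faulty_hop=None, hops: int = 3):
    """Send data across several hops; return (received_data, end_to_end_ok)."""
    packets, digest = send(data)
    for i in range(hops):
        packets = hop(packets, faulty=(i == faulty_hop))
    received = b"".join(payload for payload, _ in packets)
    # Only the receiver, comparing against the sender's digest, can confirm correctness.
    return received, hashlib.sha256(received).hexdigest() == digest


_, ok = careful_transfer(b"hello, end-to-end")
print(ok)  # True: every hop was healthy
_, ok = careful_transfer(b"hello, end-to-end", faulty_hop=1)
print(ok)  # False: every per-hop check passed, but the end-to-end digest did not match
```

A real transport would also retry when the end-to-end check fails; the point of the sketch is only that per-hop checks, however thorough, cannot replace the check at the endpoint.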

The principle is often summarized as “dumb pipes, smart clients,” but a more accurate framing might be: intermediaries often do more harm than good when they try to help.

This principle has been on my mind frequently over the past few years, especially as I’ve watched organizations—large and small—attempt to integrate generative AI into their data platforms.

In the world of data platforms, generative AI is just the latest telling of the same story:

Every few years, a new tool emerges—spreadsheets, data cubes, big data, data warehouses, document databases, dashboards, graph databases, machine learning, notebooks, deep learning—all promising fresh insights by either uncovering new signals or expanding access to data.

A defining trait of a strong data platform is its ability to adapt gracefully to these kinds of shifts.

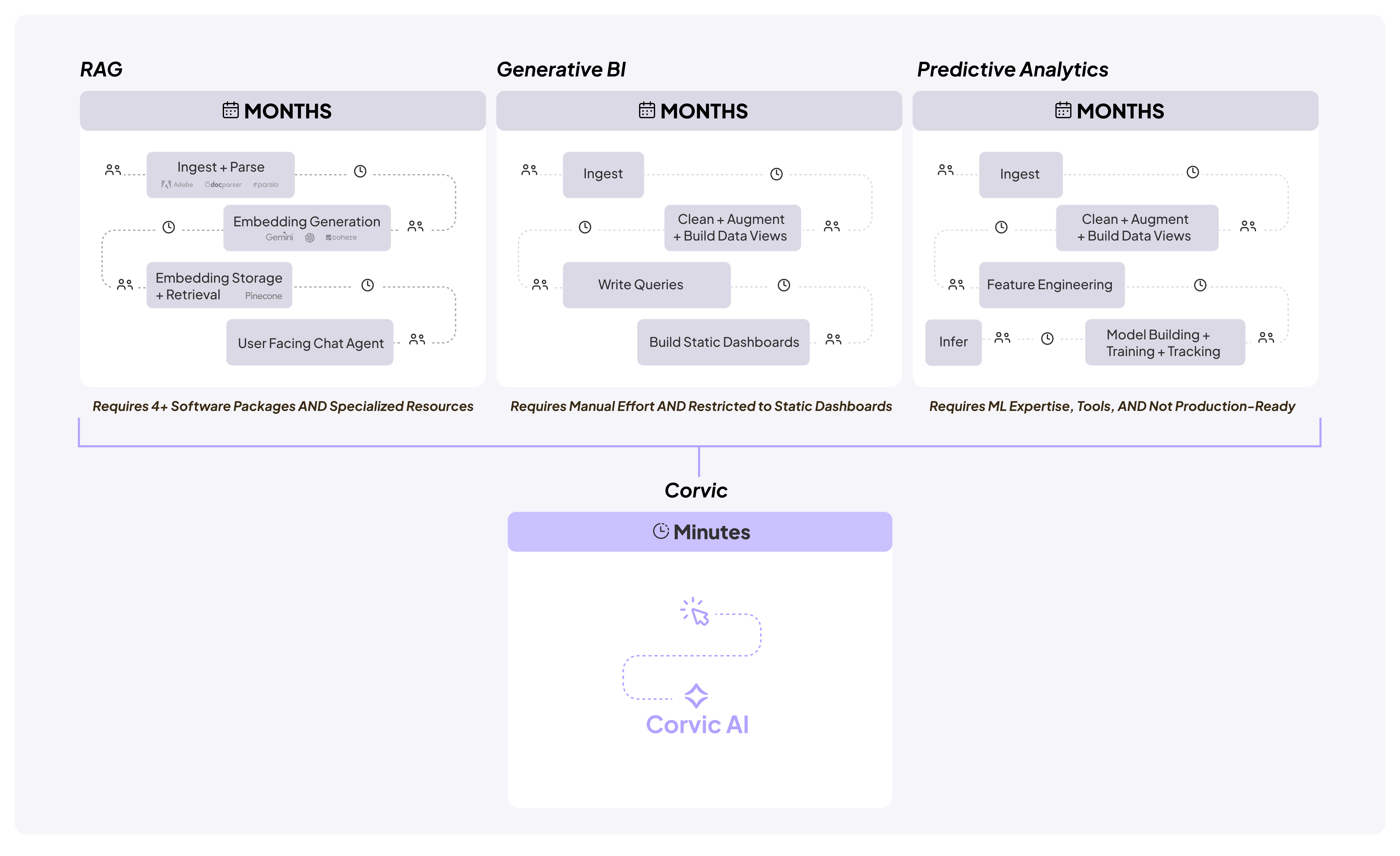

So why do organizations with mature data platforms still struggle to productionize a reliable customer service agent, integrate chemical catalogs for drug discovery, or even search a few thousand PDFs of architectural diagrams?

The reality is that while these platforms appear general-purpose, they’re often tightly tailored to past problems. Any deviation—new data types, unfamiliar workflows, or novel use cases—requires re-architecture. And because data platforms are central to organizational workflows, even small changes ripple across teams, turning simple ideas into multi-quarter initiatives.

Data Platforms Don’t Solve Your Data Problems:

Traditional data platforms provide tools to extract value and enable applications, but much of the heavy lifting is still left to you.

You were given the materials to build a castle, but ended up constructing a tomb, and now you’re paying to maintain it.

But it doesn’t have to be this way ⚠️

The real value of data comes from digitization (e.g., parsing PDFs) and integration (e.g., linking those PDFs to your product tables). In most meaningful use cases, this integration still requires human insight, because telling correlation from causation takes subject matter expertise.

To Each Their Own:

At Corvic AI, we’re building a platform that makes your data work for you, whether you’re a global enterprise or a lean team. We let you focus on what’s unique to your domain (what a chemist needs versus what a supply chain leader needs) while we handle what’s common to all data applications: correlating across formats, scaling reliably, productionizing into services, and explaining results clearly.

If your data platform makes it hard to ingest new data types or combine sources, it’s drag, not lift.

If it keeps subject matter experts from working directly with data, it’s hollow, not empowering.

If it forces you to take a stance on irrelevant implementation details, it’s a millstone, not a flywheel.

Generative AI is just a stress test of your data strategy ✨

If you’re struggling, ask yourself: What is your data infrastructure really solving for you?