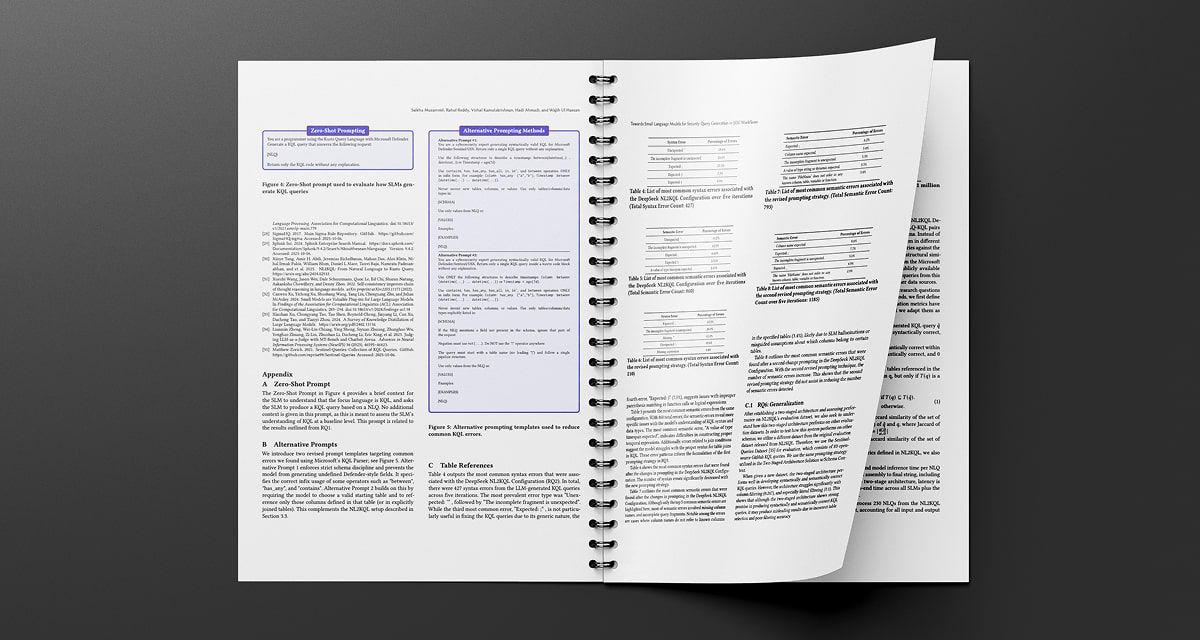

New Research: Small Language Models for SOC Query Generation at Lower Cost

Security Operations Centers (SOCs) are drowning in telemetry and alerts, and analysts often rely on Kusto Query Language (KQL) to investigate threats. Writing correct KQL takes specialized expertise, which becomes a scaling bottleneck for SOC teams.

In a new paper, Corvic AI’s Hadi Ahmadi and collaborators at the DART Lab at the University of Virginia explore whether Small Language Models (SLMs) can deliver accurate, cost-effective natural-language-to-KQL translation for real SOC workflows.

Paper snapshot

Title: Towards Small Language Models for Security Query Generation in SOC Workflows

Authors: Saleha Muzammil, Rahul Reddy, Vishal Kamalakrishnan, Hadi Ahmadi, Wajih Ul Hassan

TL;DR

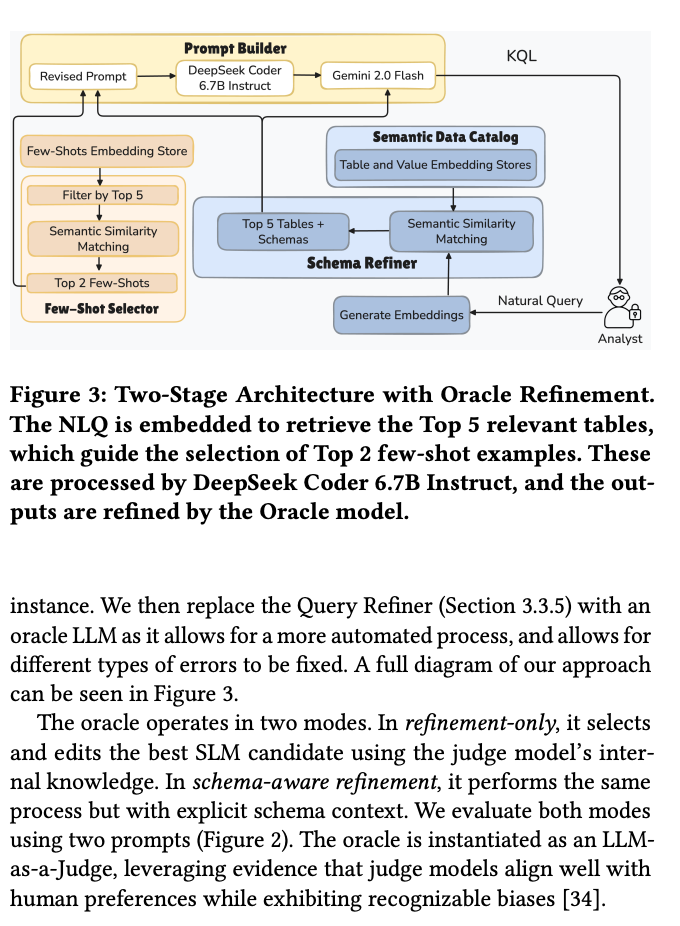

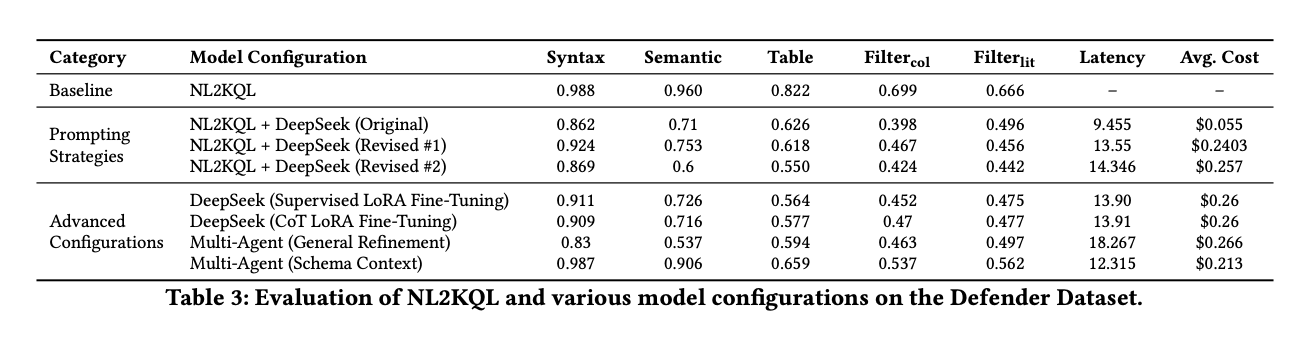

The paper proposes a practical framework combining prompt improvements, lightweight fine-tuning, and a two-stage architecture (SLM generation + low-cost LLM judging) to produce KQL with strong syntactic and semantic accuracy, while cutting token cost by up to 10× relative to GPT-5 (as reported in the paper).

Key takeaways

- SLMs can be viable for NL → KQL when you add targeted prompting and lightweight retrieval.

- A two-stage “SLM + cheap LLM judge” improves schema-aware selection and overall quality.

- Results show high accuracy on Microsoft’s NL2KQL Defender dataset and generalization to Microsoft Sentinel, with up to 10× lower token cost than GPT-5 (as reported in the paper).
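
To make the two-stage idea concrete, here is a minimal Python sketch of the control flow: an SLM drafts several KQL candidates, and a cheaper judge scores them so the best one can be selected. This is not the paper's implementation. The names `run_pipeline`, `build_prompt`, `make_toy_slm`, and `toy_judge` are hypothetical, the prompt format is an assumption, and both models are replaced by deterministic stand-ins so the example runs without any API access.

```python
from dataclasses import dataclass
from itertools import cycle
from typing import Callable, Dict, List

# Hypothetical schema representation: table name -> column names.
Schema = Dict[str, List[str]]


@dataclass
class Candidate:
    kql: str
    score: float


def build_prompt(question: str, schema: Schema) -> str:
    """Render the schema and the analyst's question into one prompt (assumed format)."""
    tables = "\n".join(f"{t}({', '.join(cols)})" for t, cols in schema.items())
    return f"Tables:\n{tables}\n\nQuestion: {question}\nKQL:"


def run_pipeline(
    question: str,
    schema: Schema,
    slm: Callable[[str], str],
    judge: Callable[[str, Schema, str], float],
    n: int = 3,
) -> Candidate:
    """Stage 1: the SLM drafts n candidates. Stage 2: the judge scores each one."""
    prompt = build_prompt(question, schema)
    drafts = [slm(prompt) for _ in range(n)]
    scored = [Candidate(d, judge(question, schema, d)) for d in drafts]
    # Keep the highest-scoring candidate; ties resolve to the earliest draft.
    return max(scored, key=lambda c: c.score)


def make_toy_slm(outputs: List[str]) -> Callable[[str], str]:
    """Stand-in for a real SLM: cycles through canned outputs, ignoring the prompt."""
    it = cycle(outputs)
    return lambda prompt: next(it)


def toy_judge(question: str, schema: Schema, kql: str) -> float:
    """Stand-in for an LLM judge: checks only that the leading table is in the schema."""
    table = kql.split("|")[0].strip()
    return 1.0 if table in schema else 0.0


# Illustrative schema and canned drafts, chosen only for this example.
SCHEMA: Schema = {"DeviceLogonEvents": ["Timestamp", "DeviceName", "AccountName", "ActionType"]}
toy_slm = make_toy_slm([
    "LogonEvents | where ActionType == 'LogonFailed'",
    "DeviceLogonEvents | where ActionType == 'LogonFailed' | summarize count() by AccountName",
])

best = run_pipeline("Which accounts had failed logons?", SCHEMA, toy_slm, toy_judge, n=2)
print(best.kql)
```

In a real deployment, `slm` and `judge` would wrap calls to actual models, and the judge would assess semantic fit rather than just the table name. The point of the sketch is the division of labor: the expensive part (generation) runs on a small model, and the larger model is used only for short scoring calls.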